Analyzing the output. Kafka Producer Using Java. Kafka provides a Java API. The signature of send() is as follows. 3. To publish JSON messages to Apache Kafka, follow these steps: Go to Spring Initializr and create a starter project with the following dependencies: Spring Web. const producer = kafka.producer(); await producer.connect(); await producer.send({ topic: 'topic-name', messages: [ { key: 'key1', value: 'hello world' }, { key: 'key2', value: 'hey hey!' } ] }). The caveat is that if the application is producing a lower volume of data, then the batch.size may not be reached within the linger.ms timeframe. Spring Boot Kafka JSON Message: We can publish JSON messages to Apache Kafka through a Spring Boot application; in the previous article we saw how to send simple string messages to Kafka. For information about how to create a message flow, see Creating a message flow. Deploy the application and test. Provided with Professional license. You can publish (produce) JSON or Avro serialized messages to a Kafka topic using the User Interface or an Automation Script. In both cases you can either provide the message content directly or put a message in the Context, containing content, headers and a key. Generally, producer applications publish events to Kafka while consumers subscribe to these events in order to read and process them. This topic outlines the formats of the messages sent from Gateway Hub to the downstream Kafka instance. Opening a Topic. The first step in publishing messages to a topic is to instantiate a portable *pubsub.Topic for your service. We are publishing messages to the Kafka topic; we had also configured a consumer to verify that the messages are flowing to the topic. The highlighted text indicates that a 'broker-list' and a 'topic id' are required to produce a message.
Step 3: Once you know all the requirements, try to produce a message to a topic using the command: 'kafka-console-producer --broker-list localhost:9092 --topic

When the above command executes successfully, you will see a message in your command prompt saying, Created Topic Test. The KafkaProducer class provides an option to connect to a Kafka broker in its constructor with the following methods. Type your message in a single line. A set of partitions forms a topic, which is a feed of messages. An Apache Kafka Adapter configured to: Publish records to a Kafka topic. A mapper to perform appropriate source-to-target mappings between the Apache Kafka Adapter and FTP Adapter. Purview will receive it, process it, and notify the Kafka topic ATLAS_ENTITIES of entity changes. The send method is used to publish messages to the Kafka cluster. Technologies: Spring Boot 2.1.3.RELEASE; Unable to publish messages larger than 1 MB to a Kafka topic. producer.send (new ProducerRecord

Perhaps the most common way to produce messages to Kafka is through the standard Kafka clients. Publish messages to a Pub/Sub Lite topic using a shim for the Kafka Producer API. Kafka uses topics to store and categorize these events; e.g., in an e-commerce application, there could be an 'orders' topic. .NET Client Installation. The easiest way to do so is to use pubsub.OpenTopic and a service-specific URL pointing to the topic, making sure you blank import the driver package. To publish JSON messages to Apache Kafka, follow these steps: Go to Spring Initializr and create a starter project with the following dependencies: Spring Web. enable: It will help to enable the delete topic. Then you can use rd_kafka_produce to send messages to it. Procedure. enable: It enables automatic topic creation on the cluster or server environment. Step 4: Send some messages. Kafka comes with a command line client that will take input from a file or standard input and send it out as messages to the Kafka cluster. For Kafka, the messages are nothing but a simple array of bytes. Type. This demonstrates that the Kafka connector publishes messages to Kafka brokers. This article defines the key components and the setup required to publish an Avro schema-based serialized message to the Kafka topic. To create a new Kafka topic, open a separate command prompt window: kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test. You can get all the Kafka messages by using the following code snippet. Publishing to a Kafka Topic. CloverDX bundles a KafkaWriter component that allows us to publish messages into a Kafka topic from any data source supported by the platform. To write messages to Kafka, use either the ProduceAsync or Produce method. The Pub/Sub Lite Kafka Shim is a Java library that makes it easy for users of the Apache Kafka Java client library to work with Pub/Sub Lite.
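Because Kafka treats a message as a plain array of bytes, as noted above, a producer has to serialize keys and values before sending them. The following is a minimal sketch in Python of what a JSON serializer might look like; it uses only the standard library, and the function names serialize_record and deserialize_value are hypothetical, not part of any Kafka client.

```python
import json
from typing import Optional, Tuple


def serialize_record(key: Optional[str], value: dict) -> Tuple[Optional[bytes], bytes]:
    """Turn a key and a JSON-compatible value into raw bytes.

    Hypothetical helper for illustration; real clients expose their own
    serializer hooks.
    """
    key_bytes = key.encode("utf-8") if key is not None else None
    # sort_keys and compact separators give a stable byte representation
    value_bytes = json.dumps(value, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return key_bytes, value_bytes


def deserialize_value(value_bytes: bytes) -> dict:
    """Reverse of serialize_record for the value part (consumer side)."""
    return json.loads(value_bytes.decode("utf-8"))


key_bytes, value_bytes = serialize_record("order-42", {"item": "book", "qty": 2})
print(key_bytes, value_bytes)
```

A consumer would apply the reverse step (deserialize_value here) to recover the original structure.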
You can pass topic-specific configuration in the third argument to rd_kafka_topic_new. The previous example passed the topic_conf, seeded with a configuration for acknowledgments. Complete the following steps to use IBM Integration Bus to publish messages to a topic on a Kafka server: Create a message flow containing an input node, such as an HTTPInput node, and a KafkaProducer node. We followed the configuration suggested in the three tutorials on the subject released for Pega 7.3. Each time you press Enter, a new message is submitted. Complete the following steps to use IBM App Connect Enterprise to publish messages to a topic on a Kafka server: Create a message flow containing an input node, such as an HTTPInput node, and a KafkaProducer node. Step 1: Let's start our Kafka producer. I'm using kafka.net dll and created topic successfully with this code: Uri uri = new Uri ("http://localhost:9092"); string topic = "testkafka"; string payload = "test msg"; var sendMsg = The caveat is that if the application is producing a lower volume of data, then the batch.size may not be reached within the linger.ms timeframe. The signature of send() is as follows. Kafka In-Memory. Publishing a message to a topic with the Go CDK takes two steps: Open a topic with the Pub/Sub provider of your choice (once per topic). The task expects an input parameter named kafka_request as part of the task's input with the following details: "topic" - Topic to publish. First, you will need a Kafka cluster. Overview. Using this, we can write a Java program that acts as a Kafka producer.
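The batch.size and linger.ms caveat mentioned above can be illustrated with a toy model. The sketch below is a simplified Python simulation, not the Kafka client's actual implementation: the class BatchAccumulator and its methods are hypothetical, time is passed in explicitly as milliseconds, and a single batch is assumed (a real producer keeps batches per partition).

```python
from typing import List, Optional


class BatchAccumulator:
    """Toy model: flush when batch_size bytes are buffered or linger_ms has elapsed."""

    def __init__(self, batch_size: int, linger_ms: int) -> None:
        self.batch_size = batch_size
        self.linger_ms = linger_ms
        self._records: List[bytes] = []
        self._buffered_bytes = 0
        self._first_append_ms: Optional[int] = None

    def append(self, record: bytes, now_ms: int) -> Optional[List[bytes]]:
        """Buffer a record; return the batch if the size threshold is reached."""
        if self._first_append_ms is None:
            self._first_append_ms = now_ms
        self._records.append(record)
        self._buffered_bytes += len(record)
        if self._buffered_bytes >= self.batch_size:
            return self._drain()
        return None

    def poll(self, now_ms: int) -> Optional[List[bytes]]:
        """Return the batch if the oldest buffered record has waited linger_ms."""
        if self._first_append_ms is not None and now_ms - self._first_append_ms >= self.linger_ms:
            return self._drain()
        return None

    def _drain(self) -> List[bytes]:
        batch, self._records = self._records, []
        self._buffered_bytes = 0
        self._first_append_ms = None
        return batch


acc = BatchAccumulator(batch_size=16, linger_ms=5)
acc.append(b"12345678", now_ms=0)                # buffered, below batch_size
full_batch = acc.append(b"12345678", now_ms=1)   # size threshold reached
acc.append(b"abc", now_ms=10)                    # low volume: never reaches 16 bytes
early = acc.poll(now_ms=12)                      # linger not yet elapsed
late = acc.poll(now_ms=15)                       # linger elapsed, partial batch is sent
print(full_batch, early, late)
```

In the low-volume case the batch is sent only because the linger time expires, so each send carries less data than batch.size would allow.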

Here, we can use different key combinations to store data on a specific Kafka partition. Messages are sent in JSON format and contain normalised metric and event data. Kafka v0.11 introduced record headers, which allow your messages to carry extra metadata. Navigate to the root directory of the Kafka package and run the following commands. To create a Kafka topic named "sampleTopic", run the following command. Finally, to create a Kafka Producer, run the following: The Kafka producer created connects to the cluster which is running on localhost and listening on port 9092. The second argument to rd_kafka_produce can be used to set the desired partition for the message.

Spring Boot Kafka Producer Example: In the prerequisites section above, we started ZooKeeper and the Kafka server, created one hello-topic, and started the Kafka console consumer. If you don't have one already, just head over to the Instaclustr console and create a free Kafka cluster to test this with. For Windows: .\bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic topic_name --from-beginning. 2. topic. My first event My second event My third event. Full step-by-step instructions to deploy a cluster can be found in this document. This article defines the key components and the setup required to publish an Avro schema-based serialized message to the Kafka topic. We're going to explain KafkaWriter configuration using an example that generates a random dataset, produces a single Kafka message, and writes it out to a Kafka topic using a KafkaWriter. Kafka Consumer example.

Perhaps the most common way to produce messages to Kafka is through the standard Kafka clients. Run the following command to verify the messages: bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic test --from-beginning. But if you just want to produce text messages to Kafka, there are simpler ways. In this tutorial I'll show you 3 ways of sending text messages to Kafka. The message format is JSON and binary. Describe the newly created topic by running the following command with the option --describe topic. This command returns the leader broker id, replication factor and partition details of the topic. What tool did we use to send messages on the command line? Kafka provides a Java API. Message payloads - metrics. What tool do you use to see topics? Step 3: Once you know all the requirements, try to produce a message to a topic using the command: 'kafka-console-producer --broker-list localhost:9092 --topic

The KafkaProducer class provides an option to connect to a Kafka broker in its constructor with the following methods. Step 1: Let's start our Kafka producer. Alternatively, you can also use the consume operation to consume the published message. The send method is used to publish messages to the Kafka cluster. What tool do you use to create a topic? For information about how to create a message flow, see Creating a message flow. enable: It enables automatic topic creation on the cluster or server environment. What tool do you use to see topics? Kafka publishing message formats. A managed event hub is created automatically when your Microsoft Purview account is created. We're going to explain KafkaWriter configuration using an example that generates a random dataset, produces a single Kafka message, and writes it out to a Kafka topic using a KafkaWriter. The KafkaProducer class provides a send method to send messages asynchronously to a topic. A Kafka message is a small or medium-sized piece of data. 2. Here we will see how to send a Spring Boot Kafka JSON message to a Kafka topic using Kafka Template. At a later stage, version 2 of the connector added support for publishing events to Kafka. There are many Kafka clients for C#; a list of some recommended options for using Kafka with C# can be found here. In this example, we'll be using Confluent's kafka-dotnet client. Add the Confluent.Kafka package to your application. This package is available via NuGet. When we open the failed messages, they show blank XML (pic 3). Command-line client to publish and consume messages from a Kafka topic. My first event My second event My third event. The task expects an input parameter named kafka_request as part of the task's input with the following details: "topic" - Topic to publish.
Complete the following steps to use IBM App Connect Enterprise to publish messages to a topic on a Kafka server: Create a message flow containing an input node, such as an HTTPInput node, and a KafkaProducer node. Here we will see how to send a Spring Boot Kafka JSON message to a Kafka topic using Kafka Template. Apache Kafka is a powerful, high-performance, distributed event-streaming platform. Examples. However, when the data flow is run, all the messages go to the failed state (pic 2). confluent-kafka-dotnet is made available via NuGet. It's a binding to the C client librdkafka, which is provided automatically via the dependent librdkafka.redist package for a number of popular platforms (win-x64, win-x86, debian-x64, rhel-x64 and osx). Generally, producer applications publish events to Kafka while consumers subscribe to these events in order to read and process them. Install the kafka-node library in your Node.js project with this command: npm i kafka-node. This is because a producer must know the id of the topic to which the data is to be written. Initiate your Node.js project with this command: npm init. I'm using kafka.net dll and created topic successfully with this code: Uri uri = new Uri ("http://localhost:9092"); string topic = "testkafka"; string payload = "test msg"; var sendMsg = CSV and JSON are supported; Avro is being considered. Purview will receive it, process it, and notify the Kafka topic ATLAS_ENTITIES of entity changes. Next, we'll produce some messages to the Kafka cluster using a ProducerBuilder. 3. There are many Kafka clients for C#; a list of some recommended options for using Kafka with C# can be found here. In this example, we'll be using Confluent's kafka-dotnet client. Add the Confluent.Kafka package to your application. This package is available via NuGet.

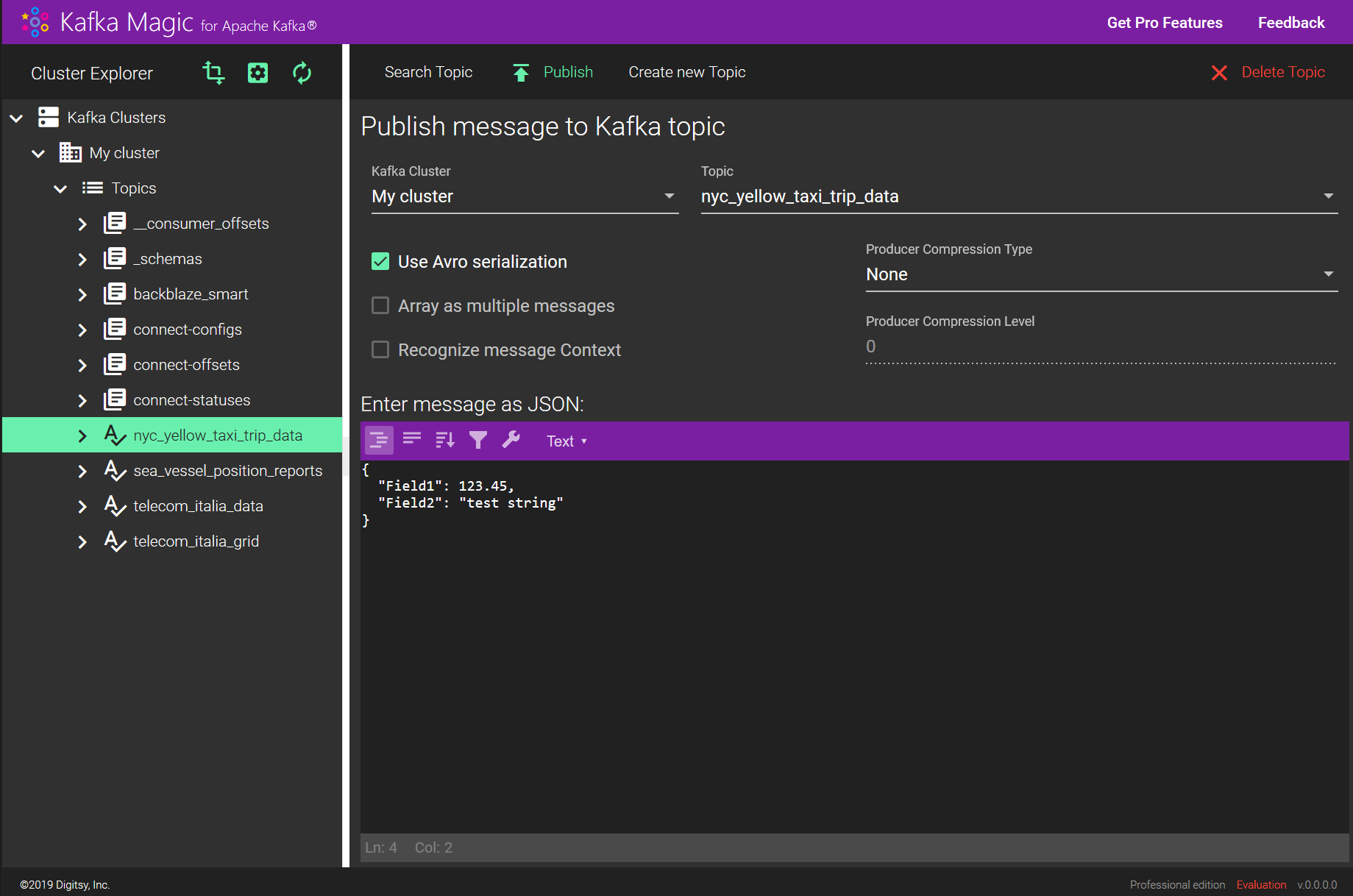

topics. Each time you press Enter, a new message is submitted. Producer: Creates and sends messages to consumers. Send messages on the topic. See Purview account creation. Spring Boot Kafka JSON Message: We can publish JSON messages to Apache Kafka through a Spring Boot application; in the previous article we saw how to send simple string messages to Kafka. Not receiving the messages published to an Apache Kafka topic. Kafka Consumer example. Kafka publishing message formats. I want to call a method that sends a Kafka message to a topic inside a Future when a failure occurs, without using a blocking call, to maintain the scalability of my application. Apache Kafka is a powerful, high-performance, distributed event-streaming platform. Provided with Professional license. You can publish (produce) JSON or Avro serialized messages to a Kafka topic using the User Interface or an Automation Script. In both cases you can either provide the message content directly or put a message in the Context, containing content, headers and a key. The following table describes some of the header fields; this list is not comprehensive. 2. Open the project in an IDE and sync the dependencies. Create a producer.js file in your project root, and let us initiate a connection to your Apache Kafka service with this code: Initiate connection into Apache Kafka Service. I'm new to Kafka and I want to try to create a topic and send a message to Kafka from my .NET application. Call it producer. This topic outlines the formats of the messages sent from Gateway Hub to the downstream Kafka instance. Here we will see how to send a Spring Boot Kafka JSON message to a Kafka topic using Kafka Template. For information about how to create a message flow, see Creating a message flow. Run the following command to verify the messages: bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic test --from-beginning.

Producer: Creates and sends messages to consumers. 3. Question. The producer created in the Kafka task is cached. .\bin\windows\kafka-console-producer.bat --broker-list localhost:9092 --topic test-topic. Open a new cmd window to send messages. Kafka provides a Java API. Type your message in a single line. Publishing to a Kafka Topic. CloverDX bundles a KafkaWriter component that allows us to publish messages into a Kafka topic from any data source supported by the platform. enable: It will help to enable the delete topic. 1. create. kafka-topics.sh. topics. What tool did we use to send messages on the command line? We had published messages with incremental values Test1, Test2. This topic outlines the formats of the messages sent from Gateway Hub to the downstream Kafka instance. I'm new to Kafka and I want to try to create a topic and send a message to Kafka from my .NET application. You can publish messages to the Event Hubs Kafka topic ATLAS_HOOK. The test connectivity works fine in the data set (pic 1). I'm using kafka.net dll and created topic successfully with this code: Uri uri = new Uri ("http://localhost:9092"); string topic = "testkafka"; string payload = "test msg"; var sendMsg = The highlighted text indicates that a 'broker-list' and a 'topic id' are required to produce a message. Passing NULL will cause the producer to use the default configuration. With librdkafka, you first need to create a rd_kafka_topic_t handle for the topic you want to write to. Opening a Topic. 1. create. If a partition is specified in the message, use it; if no partition is specified but a key is present, choose a partition based on a hash (murmur2) of the key; if no partition or key is present, choose a partition in a round-robin fashion. Message Headers. 2. For key 1, the event message will be published to partition 1; for key 2, the event message will be published to partition 2; and so on.
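The three partition-selection rules above (explicit partition, then key hash, then round-robin) can be sketched as follows. This is an illustrative Python model, not the Kafka client's partitioner: the class name SketchPartitioner is hypothetical, and zlib.crc32 is used as a stand-in hash instead of murmur2, so the partition numbers it produces will not match those of a real Kafka producer.

```python
import itertools
import zlib
from typing import Optional


class SketchPartitioner:
    """Pick a partition: explicit partition > hash of key > round-robin."""

    def __init__(self, num_partitions: int) -> None:
        self.num_partitions = num_partitions
        # Endless 0, 1, ..., n-1, 0, 1, ... sequence for keyless messages
        self._round_robin = itertools.cycle(range(num_partitions))

    def partition(self, key: Optional[bytes] = None, partition: Optional[int] = None) -> int:
        if partition is not None:
            # Rule 1: the message names its partition, so use it
            return partition
        if key is not None:
            # Rule 2: hash the key (crc32 here is a stand-in for murmur2)
            return zlib.crc32(key) % self.num_partitions
        # Rule 3: no partition and no key, so rotate through partitions
        return next(self._round_robin)


p = SketchPartitioner(num_partitions=3)
explicit = p.partition(key=b"key1", partition=2)
keyed_first = p.partition(key=b"key1")
keyed_second = p.partition(key=b"key1")
keyless = [p.partition() for _ in range(4)]
print(explicit, keyed_first == keyed_second, keyless)
```

The property that matters for ordering is that the same key always maps to the same partition, which holds for any deterministic hash as long as the partition count does not change.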
But if you just want to produce text messages to Kafka, there are simpler ways. In this tutorial I'll show you 3 ways of sending text messages to Kafka. The format of the Kafka message payload is described to the database through a table definition: each column is mapped to an element in the messages. Complete the following steps to use IBM App Connect Enterprise to publish messages to a topic on a Kafka server: Create a message flow containing an input node, such as an HTTPInput node, and a KafkaProducer node. Open the project in an IDE and sync the dependencies. Conclusion. Generally, producer applications publish events to Kafka while consumers subscribe to these events in order to read and process them. Producer: Creates and sends messages to consumers. Producing Messages. > bin/kafka-list-topic.sh --zookeeper localhost:2181 Alternatively, you can also configure your brokers to auto-create topics when a non-existent topic is published to. Type your messages and press Enter to publish them to the Kafka topic. What tool do you use to create a topic? You publish messages to topics in Kafka. A partition can have different consumers, and each one accesses the messages using its own offset. The first step in publishing messages to a topic is to instantiate a portable *pubsub.Topic for your service.

Figure 1: Producer message batching flow. Producers publish messages into Kafka topics. This is going to publish messages to Kafka. Consider the diagram below, where there is one Kafka topic with five partitions; the Event Producer produces events and publishes them to a partition according to the message context, e.g. A Kafka Publish task is used to push messages to another microservice via Kafka. Kafka publishing message formats. In the above example, we are consuming 100 messages from the Kafka topics, which we produced using the Producer example we learned in the previous article. enable: It will help to enable the delete topic. Kafka stores the messages in a topic. Next, let's autowire the Kafka template for Spring, which provides convenience methods to publish to Kafka. The easiest way to do so is to use pubsub.OpenTopic and a service-specific URL pointing to the topic, making sure you blank import the driver package. Specify the message structure to use (for this example, an XML schema (XSD) document) and the headers to use for the message. Passing NULL will cause the producer to use the default configuration. Summary of what this article will cover: Then we have defined the tools required to publish an Avro-based message to the Kafka topic along with the reference code. .\bin\windows\kafka-console-producer.bat --broker-list localhost:9092 --topic test-topic. The modern-cpp-kafka project on GitHub has been thoroughly tested within Morgan Stanley. I'm new to Kafka and I'm trying to publish data from an external application via HTTP, but I cannot find a way to do this. The highlighted text indicates that a 'broker-list' and a 'topic id' are required to produce a message. First, you will need a Kafka cluster. This is because a producer must know the id of the topic to which the data is to be written.
I want to call a method that sends a Kafka message to a topic inside a Future when a failure occurs, without using a blocking call, to maintain the scalability of my application.
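One common non-blocking pattern for this kind of requirement is to attach a completion callback to the future instead of waiting on it. The sketch below shows the idea in Python with concurrent.futures as an analogue of the Future described in the question; the helper publish_on_failure and the send callable are hypothetical placeholders, and a real application would pass in its Kafka client's asynchronous send method.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List, Tuple


def publish_on_failure(future: Future, send: Callable[[str, bytes], None], topic: str) -> None:
    """Attach a callback that publishes the error to `topic` if `future` fails.

    Nothing here waits on the future; the callback runs when it completes.
    `send` is a placeholder for an asynchronous producer call.
    """

    def _on_done(done: Future) -> None:
        if done.cancelled():
            return
        error = done.exception()  # returns immediately because the future is done
        if error is not None:
            send(topic, str(error).encode("utf-8"))

    future.add_done_callback(_on_done)


# Stand-in for a producer: records what would have been sent
sent: List[Tuple[str, bytes]] = []

with ThreadPoolExecutor(max_workers=2) as pool:
    failing = pool.submit(lambda: 1 / 0)
    succeeding = pool.submit(lambda: 42)
    publish_on_failure(failing, lambda t, v: sent.append((t, v)), "errors")
    publish_on_failure(succeeding, lambda t, v: sent.append((t, v)), "errors")

print(sent)
```

Only the failed task produces a message, and the submitting thread is never blocked waiting for either result.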