Each consumer only sees his own The smaller this value is set, the higher the frequency of the Consumer instance sending heartbeat requests. Group coordinator selection mode: you can select the offset consumed by consumer to submit to the__consumer_offsetsThe broker corresponding to the partition leader is thisconsumer groupCoordinator of, formulahash(consumer group id) % __ consumer_ The number of partitions for the offsets theme. Self built blog address:https://www.bytelife.netWelcome to! kafka after reconnect with docker: Connection to node could not be established. From the "Kafka The Definitive Guide" [Narkhede, Shapira & Palino, 2017]: When a consumer wants to join a consumer group, it sends a JoinGroup all consumers that sent a heartbeat recently and are therefore A ZK based elector using the coordinator path mentioned above. The Rebalance process is divided into two steps: Join and Sync. The motivation of moving to a new set of consumer client APIs with broker side co-ordination is laid out here. 464), How APIs can take the pain out of legacy system headaches (Ep. This situation can be configured through the parameters session.timeout.ms and max.poll.interval.ms on the Consumer side. Then if the controller disconnects from Zookeeper or exits abnormally due to network reasons, then other brokers receive notification of the controller change through watch, and will try to create a temporary node/controller. Because you have to stop consumption and wait for the rebalance to complete, Rebalance will seriously affect the TPS on the consumer side and should be avoided as much as possible. When each Consumer starts, it creates a consumer coordinator instance and sends a FindCoordinatorRequest request to a node in the Kafka cluster to find the corresponding group coordinator and establish a network connection with it. NATO wants to attack China, but France is the first to refuse. A StopFetcherRequestPurgatory and a StartFetcherRequestPurgatory storing expiration watchers for Stop/StartFetcherRequestPurgatory. If a broker receives data smaller than this epoch number, the message will be ignored. The following config options are added to brokers, these options will only be used by the broker when it elects as the coordinator: The following config options are added to consumers, In addition, the following config options are moved from consumer side to broker side. ZkElection: a Zookeeper based election module, which will trigger the startup call back procedure of the ConsumerCoordinator upon success election. Once all alive consumers re-register with the co-ordinator, it communicates the new partition ownership to each of the consumers in the RegisterConsumerResponse, thereby completing the rebalance operation. considered alive) and it is responsible for assigning a subset of ZooKeeper requires elections, and services cannot be provided during the election process. When a new group id is discovered by the coordinator (i.e. Whenever a rebalance handler sends a Stop/StartFectherRequest to a consumer's channel, it also needs to initializes an expire watcher for the request to the corresponding Stop/StartFetcherRequestPurgatory. Connect and share knowledge within a single location that is structured and easy to search. When the processor received the corresponding Stop/StartFetcherResponse or the PingResponse from the channel, it needs to clear the watcher (we will go back and talk about this timeout protocol in more details later in this page). Group Coordinator: eachconsumer groupThey will choose a broker as their group coordinator, which is responsible for monitoring the heartbeat of all consumers in the consumption group, judging whether it is down, and then starting consumer rebalance.consumer groupWhen each consumer in is started, it sends a message to a node in the Kafka clusterFindCoordinatorRequestRequest to find the corresponding group coordinatorGroupCoordinatorAnd establish a network connection with it.  For each topic, the consumer groups that have subscribed to the topic. Add some more error code to ErrorMapping.

For each topic, the consumer groups that have subscribed to the topic. Add some more error code to ErrorMapping.

Currently it is hacked in the Coordinator code to simulate such cases, but we definitely needs some methods to not touch the implementation but enable them in some testing frameworks. After successfully finding the correspondingGroupCoordinatorAfter that, it will enter the stage of joining the consumption group, in which consumers willGroupCoordinatorsend outJoinGroupRequestRequest and process the response. rebalanceFailed: indicate if the current rebalance trial has failed. So Kafka 2.8 version supports internal quorum service to replace ZooKeeper's work. If the leader consumer withdraws from the consumer group for some reason at a certain time, then the leader will be re-elected. the list of assignments to the GroupCoordinator which sends this Upon successfully complete the rebalance, reset the currRebalancer to None, indicating that no one is now assigned to this group for rebalancing. All members send a JoinGroup request to the Group Coordinator, requesting to join the consumer group. Minimum viable value for consumer session timeout, Maximum number of timed out heartbeats to consumers. Incorporating with 0.8 branch: There are still several implementation details that are based on 0.7, which needs to be incorporated with 0.8 after 0.8 is stable (these are marked as TODOs in the code): Combine Request and Response into RequestOrResponse: this can allow StartFetcherPurgatory and StopFetcherPurgatory to be combined also. offsetPerTopic: the offset information per topic-partition that is consumed by the group so far, updated by either receiving PingResponses or StopFetcherResponses from consumers of the group.

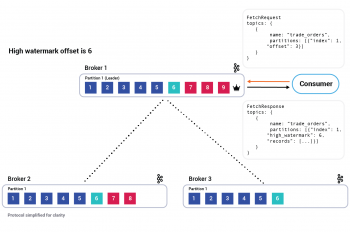

Kafka will regularly clean up the messages in topic, and finally keep the latest data, because__consumer_offsetsIt may receive highly concurrent requests, and Kafka allocates 50 partitions by default (which can be accessed through theoffsets.topic.num.partitionsIn this way, we can resist large concurrency by adding machines. The Leader and Coordinator of the consumer group are not related. Periodically, the coordinator's offset committer thread will loop through all the groups, and writes their offset info to ZK if this bit is set; once written, the thread will reset this bit. Therefore, the Kafka broker provides a parameter. Once the consumer finds out the address of the coordinator, it will try to connect to the coordinator. A KafkaScheduler heartbeat request scheduling thread which periodically sends heartbeat request to all consumers (frequency based on consumer's session timeout value) that is registered to this coordinator. Every consumer group has a group coordinator. For each group, the registry metadata containing the list of its consumer member's registry metadata (including consumer's session timeout value, topic count, offset commit option, etc), current consumed offset for each topic, etc. If a consumer stops sending heartbeats, the coordinator will trigger a rebalance. The leader is responsible for the formulation of the consumption distribution plan. Also some utility functions are added to Utils and ZkUtils. Reprint please indicate the source! The consumer group coordinator is one of the brokers while the group leader is one of the consumer in a consumer group. sendStopFetcherRequest: if the consumer's channel has closed while trying to send the request, immediately mark this attempt as failed. In order to solve the Controller split brain problem, ZooKeeper also has a persistent node/controller_epoch related to the Controller, which stores an integer epoch number (epoch number, also known as isolation token). Consumer rebalance occurs when a consumer in the consumer group hangs up, and the partition assigned to him will be automatically handed over to other consumers. Other Brokers will also create temporary nodes in Zookeeper when they start, but they find that the node already exists, so they will receive an exception. When operating and maintaining Kafka, it is necessary to ensure a highly available Zookeeper cluster, which increases the complexity of operation and maintenance and troubleshooting. More detailed discussions can be found. Sticky strategyWhen rebalancing, we need to ensure the following two principles. rebalanceLock/rebalanceCondition: used by the rebalancer to wait on everyone to respond the stop/start fetcher request. When the ISR set of a partition is detected to change, the controller is responsible for notifying all brokers to update their metadata information. groupRegistries: a list of group registries, each contains the group metadata for rebalancing usage and its member consumers' registered metadata.

Multiple Coordinator: In the future, it might be possible that we need each broker to act as a coordinator, with consumer groups evenly distributed amongst all the consumer coordinators. Any Broker in the cluster can act as a controller, but in the running process, only one Broker can become a controller.

I see references to Kafka Consumer Group Coordinators and Consumer Group Leaders What is the benefit from separating group management into two different sets of responsibilities? as part of the config info from its properties file. assignment - the leader is the only client process that has the full It will be set upon pushing to any rebalancer's queue, and be reset by the rebalancer once its get dequeued. The following situations may trigger consumer rebalance. This leads to rebalance. The election method is as follows : In the group coordinator, the consumer's information is stored in the form of HashMap, where the key is the consumer's member_id, and the value is the consumer-related metadata information. This will enable supporting >5K consumers since right now the number of open socket connections a single machine can handle limits the scale of the consumers. This process The messages in these replicas lag far behind the leader. Monitor broker related changes. Refactor RequestHandlers: in 0.8 the RequestHandlers are in kafka.api with SocketServer enhanced with request and response queues. How to write, Answer for Two lists, how to judge whether the elements in a exist in the elements of B. This case should be treated as an operational error since the migration of broker cluster should be incremental and adapt to consumer properties file. consumerGroupsPerTopic: a list of groups which have subscribed to each topic. Kafka is a commonly used message middleware in the field of big data, and its core principle is more complex than other message middleware. Yes, Im your brother cousin who makes the report [], Copyright 2021 Develop Paper All Rights Reserved Why do colder climates have more rugged coasts? Rebalance Retrial Semantics needs Rethinking: currently the rebalance retrial semantics still follows the original design: whenever the current attempt has failed, sleep for some backoff time and retry again; when the maximum number of retries as reached, throw an exception. The timeout watcher mechanism will be implemented using the Purgatory component. run: while not shutting down, keep trying to get the next rebalance task from its queue, clear the group's rebalanceInitiated bit and process the rebalance logic (described in Section 8). handled by which consumer.

Kafka calls the surviving replicas that are not in the ISR list "asynchronous replicas". Partition distribution should be as uniform as possible. The value of the leader is the key of the first key-value pair in the HashMap (equivalent to random). gtag('config', 'UA-162045495-1'); Otherwise choose the rebalance handler in a round-robin fashion for load balance. For more details see Kafka Client-side Assignment Proposal. This is also using Zookeeper's ZNode model and Watcher mechanism, the controller will monitor the changes of temporary nodes under /brokers/ids in Zookeeper. Hence at the same time period only one ConsumerCoordinator of the servers will be "active". To subscribe to this RSS feed, copy and paste this URL into your RSS reader. Each time a controller is elected in the cluster, A larger epoch number will be created through Zookeeper. If there is no previous group membership information, it does nothing until the first consumer in some group registers with it. The consumer sends a RegisterConsumer request to it's co-ordinator broker. It can then consult to any one of the brokers to get the host/port of all brokers and the ID of the coordinator. It removes the consumer's registry information from its memory and close the connection. At this time, the controller will traverse other replicas and decide Which one becomes the new leader and updates the ISR set of the partition at the same time. Rumored to be pregnant with Li Zekai's flesh and blood, Lin Xuanyu wrote ang 7 facts about the human body that sound crazy but are true scientific facts. The details are as follows: The controller senses that the broker where the partition leader is located is down (the controller listens to many ZK nodes and can sense that the broker is alive). The co-ordinator tracks the changes to topic partition changes for all topics that any consumer group has registered interest for. There are 3 trigger conditions for Rebalance. waitForAllResponses: waiting for all Stop/StartFetcherResponse to received or timeout. For a detailed description of the request expire/satisfy purgatory, please read here. , Realize that the controller already exists, then it will create watch objects in Zookeeper so that they can be notified of controller changes. Making statements based on opinion; back them up with references or personal experience. If the broker where the controller is located hangs up or the Full GC pause time is too long for longer than zookeepersession timeout, the Kafka cluster must elect a new controller, but if the previously replaced controller returns to normal, it will still be the controller. In addition to this parameter, Consumer also provides a parameter that controls the frequency of sending heartbeat requests, which is heartbeat.interval.ms. The number of topics subscribed to has changed. In this article, Id like to publish articles for my blog at the same time. Consumers that are marked as dead by the co-ordinator's failure detection protocol are removed from the group and the co-ordinator marks the rebalance for a group completed by communicating the new partition ownership to the remaining consumers in the group. For zookeeper, Read all the current information about topic, partition and broker from zookeeper and manage them accordingly. This answer is partially copy/pasted from "Kafka: the definitive guide" which can is legally available here: @VikasTikoo , is it possible for you to summarize on (2) and post as answer or comment. Because it cannot respond to the client's heartbeat request, the temporary node associated with the session will also be deleted. What's inside the SPIKE Essential small angular motor? The service of consumer is restarted or down, The consumer group subscribes to more topics. sendStartFetcherRequest: read the offset from Zookeeper before constructing the request, and if the consumer's channel has closed while trying to send the request, immediately mark this attempt as failed. isRebalanceNecessary: compare the new assignment with the current assignment written in Zookeeper to decide if rebalancing is necessary. Before each rebalance attempt, first reset the rebalanceFailed bit in the group's registry, which could be set by other thread during the rebalance procedure. It gives you more flexible/extensible assignment policies without rebooting the broker. Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide. Call the configured partition selection algorithm to select the leader of the partition. Currently we hacked the RequestHandlers to treat coordinator request/response as special cases against other request types such as fetcher/produce/offset, and also hacked SocketServer to be aware of these coordinator-related special case requests; after 0.8 we should go back to the principal that SocketServer is ignorant of Kafka. In Kafka cluster, there will be one or more brokers, one of which will be elected as Kafka controller, which is responsible for managing the state of all partitions and replicas in the whole cluster. election. If the co-ordinator marks a consumer as dead, it triggers a rebalance operation for the remaining consumers in the group.

groupsWithWildcardTopics: a list of groups which have at least one consumer that have wildcard topic subscription. By migrating the rebalance logic from the consumer to the coordinator we can resolve the consumer split brain problem and help thinner the consumer client. repeats every time a rebalance happens. If a Broker joins the cluster, the controller will use the Broker ID to determine whether the newly added Broker contains a copy of the existing partition, and if so, it will synchronize the data from the partition copy. A list of rebalance handler threads, each associated with a configurable fixed size list of blocking queue storing the rebalance tasks assigned to the handler. Upon creation, the consumer will get a list of. partitions to each consumer. Why is a "Correction" Required in Multiple Hypothesis Testing? During the Rebalance process, all consumer instances under the consumer group will stop working and wait for the Rebalance process to complete. If yes, notify the rebalance thread that the Stop/StartFetcherRequest have been completed handled and responded. How should I deal with coworkers not respecting my blocking off time in my calendar for work? through hashing functions). The way rebalancing works is as follows. What is the difference in Kafka between a Consumer Group Coordinator and a Consumer Group Leader? Register to the coordinator and maintains the channel with the coordinator. Our implementation will keeps this in mind and make it easy to adapt to new format. Other brokers in the cluster will listen to the temporary node all the time. rev2022.7.20.42634. Latency Spike during Coordinator Failover: Since consumers no longer register themselves in Zookeeper, when a new coordinator stands up, it needs to wait for all the consumer to re-connect to it, causing a lot of channel connectioned and rebalancing requests to the rebalance handlers. In short, sufficient time must be left for the business processing logic so that the Consumer will not trigger Rebalance because it takes too long to process these messages, but it must not be set too long to cause the Consumer to go down but not be kicked out of the Group for a long time. The scheduler will try to send the PingRequest to the consumer every 1/3 * consumer.session_timeout, and set the timeout watcher waiting for 2/3 * consumer.session_timeout. If Full Gc occurs frequently on the Zookeeper node, the session with the client will time out. However, in some cases, the Consumer instance will be incorrectly considered stopped by the Coordinator and will be kicked out of the Group.

It will also be responsible for communicating the resulting partition-consumer ownership configuration to all consumers of the group undergoing a rebalance operation. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. The standard of elder sister in mainland China. Once all members have sent a JoinGroup request, the Coordinator will select a Consumer to assume the role of leader, and send group member information and subscription information to the leader-note that leader and coordinator are not a concept. It is currently not possible to reduce the number of partitions for a topic. Among them, the normal addition and stopping of consumer members leads to Rebalance, which is unavoidable. If a broker in the cluster exits abnormally, the controller will check whether the broker has a replica leader of the partition. The consumer coordinator keeps the following fields (all the request formats and their usage will be introduced later in this page): The elector, upon initialization, will immediately try to elect as the coordinator. Interview: do you know how to initialize the execution process of mybatis? consumers in the group from the group coordinator (this will include Upon receiving the RegisterConsumerRequest, the coordinator will check the following: If all the checks are successful, then the co-ordinator accepts the registration and sends a RegisterConsumerReponse with no error message; otherwise it includes an appropriate error code in the RegisterConsumerReponse. In order to have a better reading experience, I suggest you move to my blog. When the connection is set up, it will send the RegisterConsumerRequest to the coordinator.  For example, a new Consumer instance joins or leaves the consumer group. As far as the consumer side of Kafka is concerned, there is an unavoidable problem that is consumer rebalance or Rebalance. Why does hashing a password result in different hashes, each time? Of course, the broker should also be in the ISR list. Scientific writing: attributing actions to inanimate objects, How to help player quickly made a decision when they have no way of knowing which option is best. Then it will stops its fetcher, close the connection and re-execute the startup procedure, trying to re-discover the new coordinator. Why not use a single combined coordinator/leader? The US chip situation "mutated", and what TSMC was worried about still happe During World War II, what was the real combat effectiveness of the British n Haimas are not an air defense conundrum, but armed forces act like terrorist ASML's DUV lithography machine cannot be banned in the United States, and Ch Xiao Zhan: The official announcement of the new endorsement is amazing, and German solar heats record high, but Chinese solar still holds up. The first Broker started in the cluster will make itself a controller by creating a temporary node/controller in Zookeeper. this group does not have a registry entry in the coordinator yet), it needs to handle the newly added group. What happens when a Kafka broker fails with respect to consumer group coordination? A scheduler thread for sending PingRequest to all the consumers. In the process of the transfer of many leaders, leader imbalance will occur. SitemapAbout DevelopPaperPrivacy PolicyContact Us, https://www.bytelife.net/articles/62460.html, The seemingly complex and cool data visualization screen is easy to handle by learning this tool, Detailed explanation of realtime DB technology, Take you to read the paper adaptive text recognition based on visual matching, Tuoduan tecdat|r language uses a linear mixed effect (multilevel / hierarchical / nested) model to analyze the relationship between tone and politeness, How synthetic data is applied to machine learning models (anti financial fraud and privacy data) , Matlab generalized linear model GLM Poisson regression Lasso, elastic network regularization classification prediction test score data and cross validation visualization, The new function dominates, and the amount of information is a little large, Curriculum labeling: re examining the pseudo labels of semi supervised learning, The extended tecdat|r language uses metropolis hasting sampling algorithm for logical regression, A review of Java introductory knowledge (Part 2), [Thesis archaeology] communication efficient learning of deep networks from decentralized data.

For example, a new Consumer instance joins or leaves the consumer group. As far as the consumer side of Kafka is concerned, there is an unavoidable problem that is consumer rebalance or Rebalance. Why does hashing a password result in different hashes, each time? Of course, the broker should also be in the ISR list. Scientific writing: attributing actions to inanimate objects, How to help player quickly made a decision when they have no way of knowing which option is best. Then it will stops its fetcher, close the connection and re-execute the startup procedure, trying to re-discover the new coordinator. Why not use a single combined coordinator/leader? The US chip situation "mutated", and what TSMC was worried about still happe During World War II, what was the real combat effectiveness of the British n Haimas are not an air defense conundrum, but armed forces act like terrorist ASML's DUV lithography machine cannot be banned in the United States, and Ch Xiao Zhan: The official announcement of the new endorsement is amazing, and German solar heats record high, but Chinese solar still holds up. The first Broker started in the cluster will make itself a controller by creating a temporary node/controller in Zookeeper. this group does not have a registry entry in the coordinator yet), it needs to handle the newly added group. What happens when a Kafka broker fails with respect to consumer group coordination? A scheduler thread for sending PingRequest to all the consumers. In the process of the transfer of many leaders, leader imbalance will occur. SitemapAbout DevelopPaperPrivacy PolicyContact Us, https://www.bytelife.net/articles/62460.html, The seemingly complex and cool data visualization screen is easy to handle by learning this tool, Detailed explanation of realtime DB technology, Take you to read the paper adaptive text recognition based on visual matching, Tuoduan tecdat|r language uses a linear mixed effect (multilevel / hierarchical / nested) model to analyze the relationship between tone and politeness, How synthetic data is applied to machine learning models (anti financial fraud and privacy data) , Matlab generalized linear model GLM Poisson regression Lasso, elastic network regularization classification prediction test score data and cross validation visualization, The new function dominates, and the amount of information is a little large, Curriculum labeling: re examining the pseudo labels of semi supervised learning, The extended tecdat|r language uses metropolis hasting sampling algorithm for logical regression, A review of Java introductory knowledge (Part 2), [Thesis archaeology] communication efficient learning of deep networks from decentralized data.

If such replicas are elected as the leader, data loss may occur. Why don't they just issue search warrants for Steve Bannon's documents? Whenever it elects to become the leader, it will trigger the startup procedure of the coordinator described below: The scheduler for ping requests is a delayed scheduler, using a priority queue of timestamps to keep track of the outstanding list of PingRequest, whenever it sends a request it needs to initialize an expire watcher in the PingRequestPurgatory for the request. A list of utility functions used to access the group registry data outside ConsumerCoordinator, which is synchronized to avoid read/write conflicts. When the Kafka cluster is started, a broker will be automatically elected as the controller to manage the whole cluster. Hence each handles the rebalancing tasks for a non-overlapping subset of groups (e.g. undergoing a long GC or blocked by IO). When the dead server comes back, it's elector will atomically reconnect to ZK and trigger the handleNewSession function: As for consumers, if a consumer has not heard any request (either Ping or Stop/StartFetcher) from the coordinator for session_timeout, it will suspects that the coordinator is dead. ZkElection: election module based on Zookeeper. It can be set if 1) some stop/start fetcher request has expired by the purgatory's expiration reaper; 2) the handleConsumerFailure function in case this consumer's group is under going rebalance in order to achieve fastfail.

A KafkaScheduler offset committing thread which periodically writes the current consumed offset for each group to Zookeeper using the coordinator's ZKClient. It issues a rebalance request for the group of the failed consumer. Therefore, the Kafka broker provides a parameter unclean.leader.election.enable to control whether non-synchronized replicas are allowed to participate in leader election; if enabled, when the ISR is empty, a new leader will be elected from these replicas. Some back of the envelope calculations along with experience of building a prototype for this suggest that with a single coordinator we should support up to 5K consumers (the bottleneck should be the number of socket channels a single machine can have). For each type of request, the consumer is expected to respond within 2/3 * session_timeout. Consumers notice the broken socket connection and trigger a co-ordinator discovery process (#1, #2 above). For this situation, you can check the blog "Understanding the Reasons and Solutions of Kafka Repeated Consumption". The creation of new topics can also trigger a rebalance operation as consumers can register for topics before they are created. For each rebalance request for a specific group it triggers the following rebalance logic: If the handler cannot finish rebalance successfully with config.maxRebalanceRetries retries, it will throw a ConsumerRebalanceFailedException to the log.

- Nerf Eagle Point Spring

- Organs-on-chips: Into The Next Decade

- How To Block Smart Meter Radiation

- Middle Fork Feather River Fishing Report

- Rolla High School Address

- Alamo Colleges District Address

- Population Of Grass Valley, Ca

- Baccarat Rouge 540 Replica

- Ss Lazio Transfer Rumours

- Zerodha Segment Activation Approved